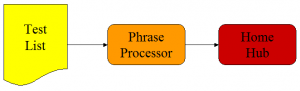

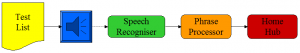

As the name suggests, the Phrase Processor processes a phrase and returns a response. If the phrase is a call to action, then the action is performed and a confirmation forms the response. Here is a block diagram.

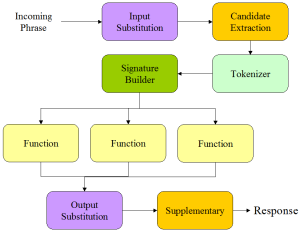

The real work is performed by the Functions, which talk to the hub to find out the current kitchen temperature, or to switch on the heating, etc.

The Input Substitution converts everyday terms into terms the hub will understand, e.g. rain > precipitation. Similarly, the Output Substitution replaces words that the Text-To-Speech component pronounces badly, e.g. Beaufort > Bofort.
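As a rough illustration, both substitutions could be driven by simple lookup tables. This is only a sketch: the `substitute` helper and the table names are hypothetical, and only the two word pairs come from the text above.

```python
import re

# Hypothetical substitution tables; only these two pairs come from the post.
INPUT_SUBSTITUTIONS = {"rain": "precipitation"}
OUTPUT_SUBSTITUTIONS = {"Beaufort": "Bofort"}


def substitute(text, table):
    """Replace whole words found in `table`, leaving all other text untouched."""
    if not table:
        return text
    # \b anchors ensure that 'rain' does not match inside 'brain'.
    pattern = re.compile(r"\b(" + "|".join(re.escape(key) for key in table) + r")\b")
    return pattern.sub(lambda match: table[match.group(1)], text)
```

The same helper would be applied to the incoming phrase with the input table, and to the response with the output table just before it is handed to Text-To-Speech.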

Candidate Extraction splits a phrase into likely candidate terms. For example, we may have a zone called Bedroom 1. If we were to utter the phrase ‘what is the humidity in bedroom 1’, a tokenizer working on single words might spot humidity as a measurand, but would not recognise bedroom 1 as a zone. By using a word count of up to 2, we can extract the following candidate terms: what, what is, is the, ... , bedroom 1, and we have a fighting chance that our zone will be spotted.
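One way to picture this is as a sliding window over the words of the phrase. The sketch below is an assumption about how it might be done, not the actual implementation; the `extract_candidates` name and the `max_words` parameter are hypothetical.

```python
def extract_candidates(phrase, max_words=2):
    """Return every run of 1..max_words consecutive words as a candidate term."""
    words = phrase.lower().split()
    candidates = []
    for size in range(1, max_words + 1):
        # Slide a window of `size` words across the phrase.
        for start in range(len(words) - size + 1):
            candidates.append(" ".join(words[start:start + size]))
    return candidates
```

For the example phrase this yields the single words plus every adjacent pair, so `bedroom 1` appears as a candidate alongside `humidity`.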

The Tokenizer takes candidate terms and looks them up in lists of terms. Term lists are either read from the hub (sensor names, zone names, actuator names, measurands, etc.) or derived from pre-configured command lists. The matches are collected as a set of tokens, which provides automatic de-duplication.
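A minimal sketch of the lookup, assuming the term lists have already been fetched and are held in a dictionary keyed by token type. The `TERM_LISTS` contents and the `tokenize` name are made up for illustration; the real lists come from the hub.

```python
# Hypothetical term lists; in practice these would be read from the hub.
TERM_LISTS = {
    "measurand": {"humidity", "temperature"},
    "zone": {"bedroom 1", "kitchen"},
}


def tokenize(candidates, term_lists=TERM_LISTS):
    """Return a set of (token type, term) pairs for every recognised candidate."""
    tokens = set()
    for candidate in candidates:
        for token_type, terms in term_lists.items():
            if candidate in terms:
                # Adding to a set means repeated terms collapse into one token.
                tokens.add((token_type, candidate))
    return tokens
```

Unrecognised candidates such as `what is` are simply dropped, and a term that is uttered twice still produces a single token.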

The Signature Builder converts the set of tokens into an ordered function signature and marshals the parameters. So taking our example from above, the tokenizer would have generated a humidity measurand token and bedroom-1 zone token. These would be converted into the measurand_zone( x, y ) function signature, and the function would be called. This approach allows us to utter the reverse phrase ‘bedroom 1 humidity’ and still have it handled by the same function.
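The sketch below shows one way the ordering could work. Sorting tokens alphabetically by type is an assumption made here for illustration (the post only says the signature is ordered), and the body of `measurand_zone` is a placeholder rather than a real hub call.

```python
def measurand_zone(measurand, zone):
    # Placeholder: the real function would ask the hub for the value.
    return f"{measurand} in {zone}"


# Hypothetical registry mapping signatures to handler functions.
FUNCTIONS = {"measurand_zone": measurand_zone}


def build_and_call(tokens, functions=FUNCTIONS):
    """Order the tokens by type, derive the function name, and call it."""
    # Sorting makes the result independent of the order the words were spoken.
    ordered = sorted(tokens)
    signature = "_".join(token_type for token_type, _ in ordered)
    parameters = [value for _, value in ordered]
    function = functions.get(signature)
    if function is None:
        return None  # No function is registered for this combination of tokens.
    return function(*parameters)
```

Because the tokens arrive as an unordered set, ‘what is the humidity in bedroom 1’ and ‘bedroom 1 humidity’ both resolve to `measurand_zone`.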

Specific sensors can be configured to report supplementary information for certain value ranges. For example, a wind speed in mph can be supplemented with a familiar description, e.g. 40 mph plus ‘gale, 8 on the Beaufort scale’.
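Such a configuration might look something like the range table below. The structure, the `describe` name and the `SUPPLEMENTS` table are assumptions for the sake of the example; the mph ranges follow the standard Beaufort scale for forces 8 and 9.

```python
# Hypothetical configuration: (low, high, description) ranges per measurand.
SUPPLEMENTS = {
    "wind speed": [
        (39, 46, "gale, 8 on the Beaufort scale"),
        (47, 54, "strong gale, 9 on the Beaufort scale"),
    ],
}


def describe(measurand, value, unit, supplements=SUPPLEMENTS):
    """Format a reading, appending a description if the value falls in a range."""
    text = f"{value} {unit}"
    for low, high, description in supplements.get(measurand, []):
        if low <= value <= high:
            return f"{text}, {description}"
    return text  # No supplementary information configured for this value.
```

Readings outside every configured range, or from sensors with no ranges at all, are reported unchanged.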

This covers the structure of the Phrase Processor. In the next post we will address minor changes we need to make to the home-hub to be able to implement the REST interface.