Once you have assembled your BME280 sensor, you need to fetch the Python scripts that talk to it. First, though, we need to prepare the Pi by enabling the serial interface for this purpose.

By default, the serial interface is used for console login, so the login console needs to be disabled.

sudo raspi-config

Choose option 5 – Interfacing Options, then option P6 – Serial.

Would you like a login shell to be accessible over serial? Answer No.

Would you like the serial port hardware to be enabled? Answer Yes.

Exit raspi-config, but don’t reboot just yet as we have a number of other changes to make first.

Disable the getty service:

sudo systemctl disable serial-getty@ttyAMA0.service

Install the Python serial library:

sudo apt-get install python-serial

Add the following files to the controller…

wget -nH -x --cut-dirs=3 -P /usr/local/bin/code/controller/ -i /usr/local/bin/code/controller/manifest6.txt http://www.warrensoft.co.uk/home-hub/manifests/controller/manifest6.txt

Uncomment the bme280 sensor in /sensor_helpers/__init__.py

and reboot the Pi.

You can now check the serial port is available with the following:

ls -l /dev

and you should see the following alias:

serial0 -> ttyAMA0
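
If you would rather check the alias from Python than scan the whole /dev listing, a minimal sketch along these lines should do the same job. The helper name resolve_alias is my own invention for illustration and is not part of the controller code.

```python
import os


def resolve_alias(path):
    """Return the real path a symlink such as /dev/serial0 points to.

    Returns None if nothing exists at the given path.
    resolve_alias is a hypothetical helper, not part of the controller code.
    """
    if not os.path.lexists(path):
        return None
    return os.path.realpath(path)


if __name__ == "__main__":
    # On a correctly configured Pi this is expected to resolve to /dev/ttyAMA0.
    print("serial0 -> {}".format(resolve_alias("/dev/serial0")))
```

If the result is None, the serial port hardware has probably not been enabled in raspi-config.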

Now it should be safe to connect your sensor module to the Pi. If you connect it before completing the configuration you may have connection issues, because the Pi interprets your module as a login. Note that the Pi TXD (BCM 14) connects to the module's Rx line and the Pi RXD (BCM 15) connects to the module's Tx line. The remaining connections are just 3v3 power and ground. I recommend you shut down and power off the Pi before making these connections.

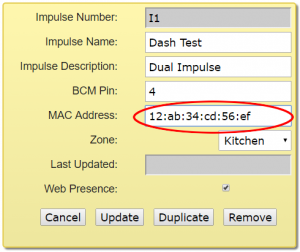

Add a new sensor using the website Organisation page, and set the sensor function to:

bme280./dev/serial0.4800.0

This function is constructed from four parts, separated by dots:

helper . serial-port . baudrate . channel

The serial port is /dev/serial0, which is an alias for the ttyAMA0 port we are connected to.

The baud rate is fixed in the PICAXE program at 4800, which works well in my setup.

The channel selects the reading: 0 for Temperature, 1 for Atmospheric Pressure or 2 for Relative Humidity.
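
To make the format concrete, here is a rough sketch of how a string like this could be split into its four parts. This is not the code the controller actually uses; the names SensorFunction, CHANNELS and parse_sensor_function are assumptions of mine, made up purely for illustration.

```python
from collections import namedtuple

# Hypothetical container for the four parts of a sensor function string.
SensorFunction = namedtuple("SensorFunction", "helper port baudrate channel")

# Channel numbers as described in this post.
CHANNELS = {0: "temperature", 1: "atmospheric pressure", 2: "relative humidity"}


def parse_sensor_function(text):
    """Split 'helper.serial-port.baudrate.channel' into typed fields."""
    parts = text.split(".")
    if len(parts) != 4:
        raise ValueError("expected helper.port.baudrate.channel, got %r" % text)
    helper, port, baudrate, channel = parts
    channel = int(channel)
    if channel not in CHANNELS:
        raise ValueError("channel must be 0, 1 or 2, got %d" % channel)
    return SensorFunction(helper, port, int(baudrate), channel)


if __name__ == "__main__":
    fn = parse_sensor_function("bme280./dev/serial0.4800.0")
    print(fn, CHANNELS[fn.channel])
```

Because the sketch splits on dots, it only works with a port path that contains no dot, which is true of /dev/serial0.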

With your new sensor configured you should be all set to take readings.

In the next post I will cover interfacing to the Amazon Dash button.